Stanford CME295 Transformers & LLMs | Autumn 2025 | Lecture 5 - LLM tuning

For more information about Stanford’s graduate programs, visit: https://online.stanford.edu/graduate-education

October 31, 2025

This lecture covers:

• Preference tuning

• RLHF overview

• Reward modeling

• RL approaches (PPO and variants)

• DPO

To follow along with the course schedule and syllabus, visit: https://cme295.stanford.edu/syllabus/

Chapters:

00:00:00 Introduction

00:04:50 Preference tuning

00:11:31 Data collection

00:17:43 RLHF overview

00:28:24 Reward model

00:29:46 Bradley-Terry formulation

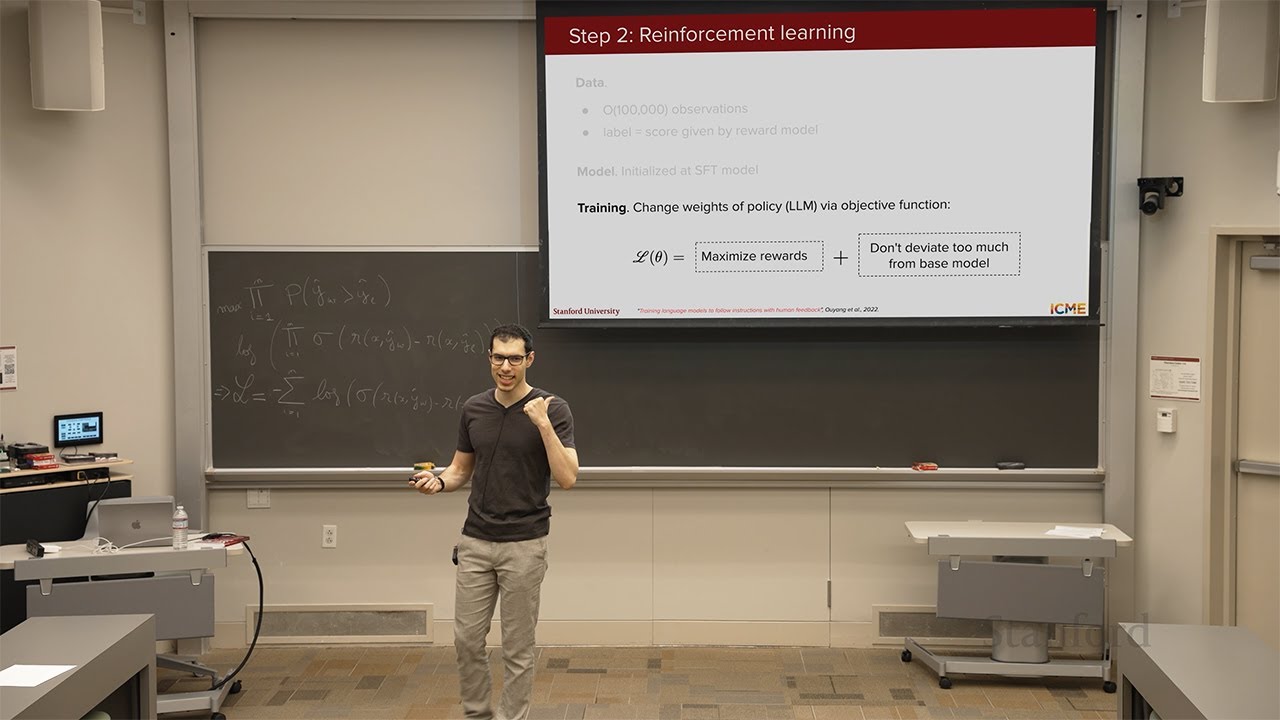

00:46:02 Reinforcement learning

00:53:54 PPO

00:57:56 Advantage, value function

01:03:39 PPO variants: Clip, KL-penalty

01:16:20 Challenges

01:19:58 On-policy vs off-policy

01:22:43 Best-of-N

01:29:47 DPO

Afshine Amidi is an Adjunct Lecturer at Stanford University.

Shervine Amidi is an Adjunct Lecturer at Stanford University.

October 31, 2025

This lecture covers:

• Preference tuning

• RLHF overview

• Reward modeling

• RL approaches (PPO and variants)

• DPO

To follow along with the course schedule and syllabus, visit: https://cme295.stanford.edu/syllabus/

Chapters:

00:00:00 Introduction

00:04:50 Preference tuning

00:11:31 Data collection

00:17:43 RLHF overview

00:28:24 Reward model

00:29:46 Bradley-Terry formulation

00:46:02 Reinforcement learning

00:53:54 PPO

00:57:56 Advantage, value function

01:03:39 PPO variants: Clip, KL-penalty

01:16:20 Challenges

01:19:58 On-policy vs off-policy

01:22:43 Best-of-N

01:29:47 DPO

Afshine Amidi is an Adjunct Lecturer at Stanford University.

Shervine Amidi is an Adjunct Lecturer at Stanford University.

Stanford Online

You can gain access to a world of education through Stanford Online, the Stanford School of Engineering’s portal for academic and professional education offered by schools and units throughout Stanford University. https://online.stanford.edu/

Our robust ...